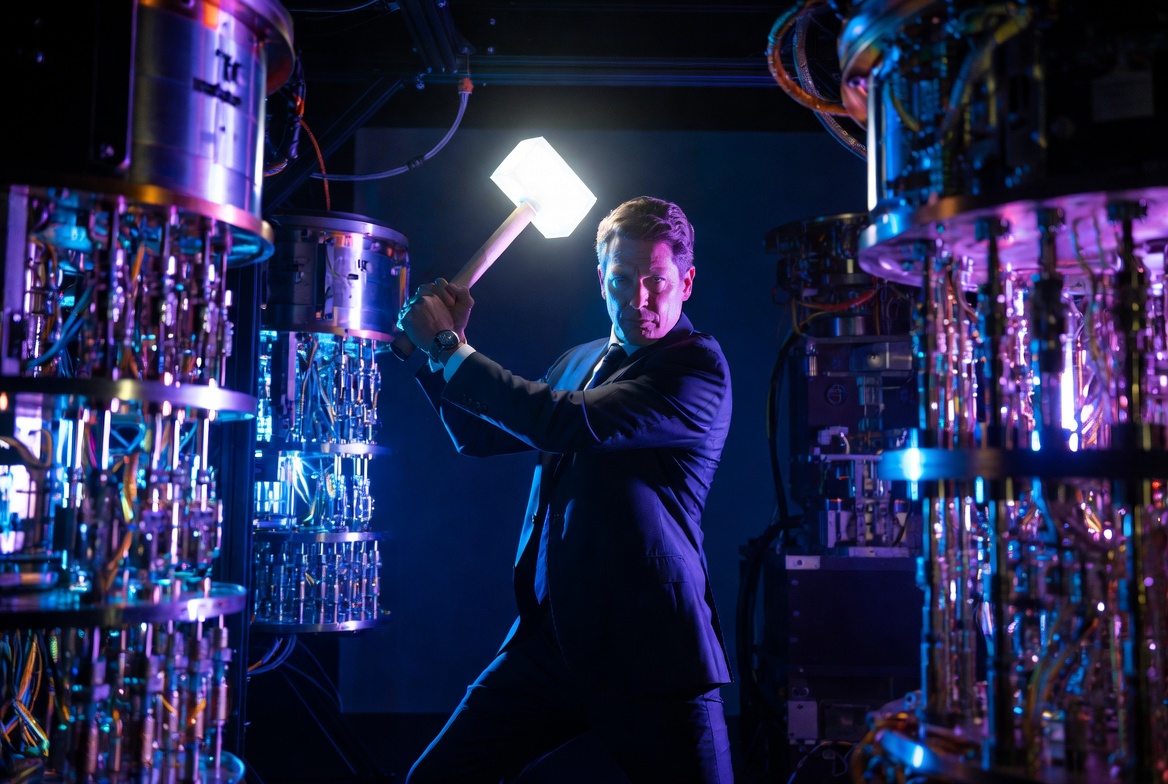

Quantum Computing vs GPUs: Pat Gelsinger Predicts the End of Nvidia’s AI Dominance


Sometimes a single bold statement can shake an entire industry, and former Intel CEO Pat Gelsinger has just delivered one. According to Gelsinger, quantum computing could make GPUs obsolete within the next decade, potentially ending Nvidia's current AI dominance. While Nvidia's H100 and Blackwell-generation B200 chips are driving a global AI boom, Gelsinger believes a "quantum breakthrough" is coming fast, and that it could collapse the GPU-driven AI market far sooner than expected.

Pat Gelsinger’s Quantum Warning: A Disruption Incoming

Now working with the venture firm Playground Global, Gelsinger has direct access to cutting-edge quantum labs, start-ups, and real qubit research, not just theoretical concepts. In an interview with the Financial Times, he described a technological "holy trinity":

Classical computing + AI computing + quantum computing.

In his view, quantum computing is on track to dominate the other two.

In simple terms:

He believes GPUs—like Nvidia’s high-priced AI accelerators—could become irrelevant in less than 10 years.

This is more than a criticism; it’s a direct challenge to Nvidia CEO Jensen Huang and the entire GPU-centered AI ecosystem.

Why Gelsinger Thinks GPUs Will Fall

- Insider access to quantum development: His role puts him close to real quantum hardware innovation, not just simulations.

- AI bubble warning: He sees signs of a hype bubble similar to past tech booms—sky-high valuations with uncertain returns.

- Historical parallels: He compares today’s landscape to the IBM–Microsoft power shift in the 1990s, suggesting OpenAI and Microsoft could face a similar fate if the balance of power shifts again.

Nvidia Responds: Quantum Is Still 20 Years Away

Meanwhile, Jensen Huang remains confident. He claims quantum computing won’t be relevant for at least 20 years. With Nvidia controlling the world’s most important AI infrastructure—from GPUs to CUDA to server systems—Huang has little reason to panic.

But Gelsinger disagrees:

“Two years, not twenty,” he says.

A quantum breakthrough could hit much sooner than the industry expects.

The Reality Check: Quantum Computing Isn’t Ready… Yet

Despite the hype, practical quantum computing still faces massive challenges before it can replace GPUs or classical AI hardware.

Key limitations slowing quantum adoption

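- Extreme instability: Qubits are fragile and error-prone, so practical machines need extensive error correction that consumes many physical qubits for every reliable logical qubit.

- Algorithm compatibility: Only certain problems, such as factoring large numbers or simulating molecules, are known to benefit from quantum acceleration; the matrix-heavy workloads behind today's AI models still run faster on classical silicon.

- Immature ecosystem: Quantum compilers, frameworks, and developer tools are still in their early stages.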

In short, for quantum computing to replace GPUs in AI, the entire ecosystem—from hardware to software—would need to evolve at an unprecedented speed.

Visionary Insight or Just Another Tech Hype?

Gelsinger is also candid about his own Intel era, describing the company as "technically rotten," with persistent delays and failed projects, including the troubled 18A process node. His candid criticism adds weight to his views but also raises questions about his motives.

Is Gelsinger predicting the future—or trying to provoke the industry?

- Best case: He’s a visionary warning the world about a coming quantum revolution.

- Worst case: It’s an over-ambitious story meant to stir debate in a crowded AI landscape.

Still, he highlights a truth often ignored:

Technology doesn’t follow marketing roadmaps.

Anyone betting everything on GPUs today may face a multi-billion-dollar problem tomorrow if quantum computing truly reaches escape velocity.

Final Verdict: Quantum Shockwave or GPU Hype Check?

Quantum computing absolutely has world-changing potential—but its timeline remains uncertain. For now, Nvidia rules the AI era. But if Gelsinger is right, the next giant leap in computing may arrive far sooner than Jensen Huang or the industry is prepared for.

Until then, as Gelsinger implies:

If you’re going to talk big, you’d better have some qubits ready to back it up.